Recent work on large language models (LLMs) such as GPT-4 has revealed a compelling phenomenon often dubbed the “Arrow of Time”: these models predict the next word in a sequence more accurately than the previous one. This asymmetry raises intriguing questions about the fundamental nature of language processing and our comprehension of temporal dynamics.

Traditional language models rest on a straightforward premise: predicting the next word in a sequence from the words that precede it. This approach enables a wide array of applications, from generating coherent text to real-time translation and intelligent chatbots. As researchers probe the capabilities of these models, temporal bias has emerged as a significant area of study. A recent investigation led by Professor Clément Hongler of the École Polytechnique Fédérale de Lausanne (EPFL), together with Jérémie Wenger of Goldsmiths in London, focuses specifically on these models’ proclivity for “forward” predictions over “backward” ones.

The researchers set out to determine whether LLMs could effectively construct narratives in reverse. They hypothesized that although LLMs are good at extending text coherently, they would be worse at the reverse task: inferring earlier words from those that follow. The study tested various architectures, including Generative Pre-trained Transformers (GPT), Gated Recurrent Units (GRU), and Long Short-Term Memory (LSTM) networks, to examine how each handles prediction in reverse.
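The forward-versus-backward comparison can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the researchers' method: it swaps the neural architectures for a character-level bigram model with add-one smoothing, and the function names and sample strings are hypothetical. The backward model is simply the same model trained and scored on reversed text.

```python
import math
from collections import Counter


def bigram_cross_entropy(train, test):
    """Average bits per character of an add-one-smoothed bigram model."""
    vocab = set(train) | set(test)
    pair_counts = Counter(zip(train, train[1:]))
    context_counts = Counter(train[:-1])
    total_bits = 0.0
    for prev, cur in zip(test, test[1:]):
        # Add-one smoothing keeps unseen pairs at a nonzero probability.
        p = (pair_counts[(prev, cur)] + 1) / (context_counts[prev] + len(vocab))
        total_bits -= math.log2(p)
    return total_bits / (len(test) - 1)


def forward_backward_loss(train, test):
    """Score the same data in reading order and in reversed order."""
    forward = bigram_cross_entropy(train, test)
    # Reversing both strings turns "predict the next character"
    # into "predict the previous character".
    backward = bigram_cross_entropy(train[::-1], test[::-1])
    return forward, backward


# Hypothetical sample text, chosen only to make the sketch runnable.
train_text = "the cat sat on the mat and the dog sat on the log " * 20
test_text = "the dog sat on the mat and the cat sat on the log "
fwd, bwd = forward_backward_loss(train_text, test_text)
print(f"forward: {fwd:.3f} bits/char, backward: {bwd:.3f} bits/char")
```

A count-based toy like this treats the two directions almost symmetrically, so it shows only the measurement protocol. It is not expected to reproduce the behavior of the neural models in the study.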

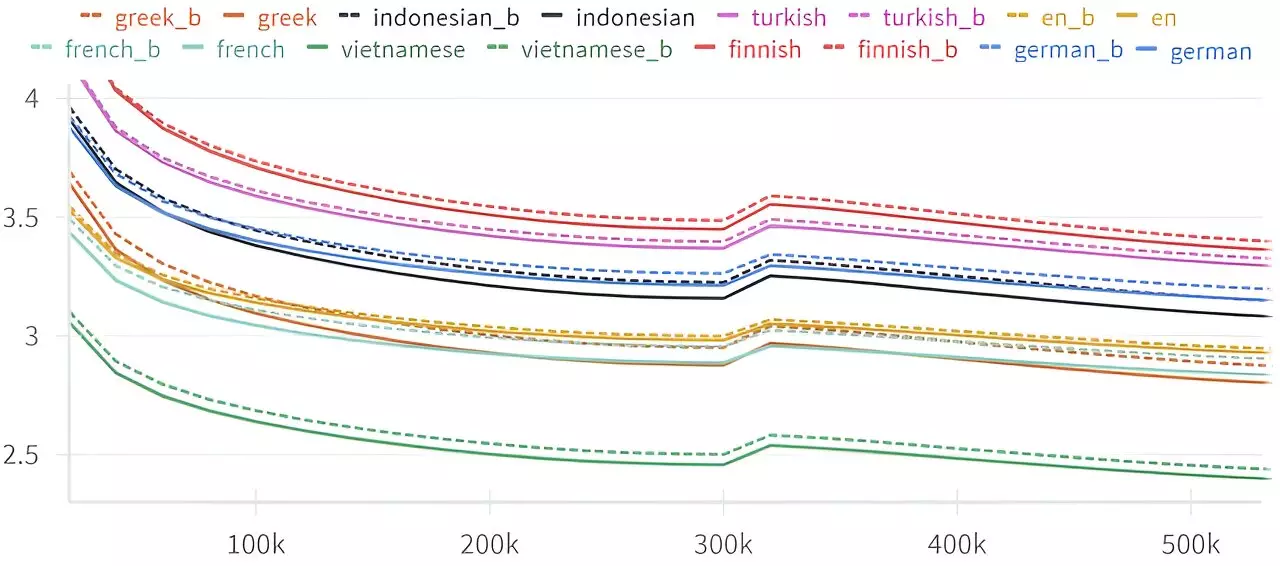

Surprisingly, all tested models, irrespective of their architecture or size, were at a disadvantage when predicting previous words. Hongler noted that the models consistently performed better when predicting what came next than what had come before, a trend observed across different languages. “Every layer of this architecture seems to be steeped in a ‘forward bias,’” he explained, indicating a universal inefficiency when it comes to backward predictions.

This discovery ties back to foundational principles laid out by Claude Shannon, a pioneering figure in information theory. Shannon’s 1951 analysis indicated that while predicting the next letter in a sequence and predicting the preceding one should theoretically present equal challenges, human performance leaned toward the forward direction. The researchers’ findings resonate with Shannon’s work, lending credence to the idea that humans and LLMs alike struggle more with backward prediction.
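The theoretical symmetry behind Shannon's observation follows from the chain rule for entropy. As a sketch, assume the text is a sequence of n random variables, written X_1 through X_n (notation introduced here for illustration, not taken from the study). The joint entropy can then be factored in either direction:

```latex
H(X_1, \dots, X_n)
  = \sum_{t=1}^{n} H(X_t \mid X_1, \dots, X_{t-1})
  = \sum_{t=1}^{n} H(X_t \mid X_{t+1}, \dots, X_n)
```

Both sums equal the same joint entropy, so an ideal predictor faces the same total uncertainty in either direction. A measured gap between the two directions would therefore seem to reflect how hard each factorization is to learn, not a difference in the information the text contains.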

Considering the implications of this temporal bias, Hongler suggests that its causes may lie in how language carries information. Language has a directional structure in which causality is pivotal. A sentence’s meaning typically builds as its ideas progress, so what comes next follows naturally from the context already given. Recovering earlier content from later references, by contrast, demands a degree of abstraction and inference that is more complex, which suggests a link to the structure and dynamics of cognition, intelligence, and causality.

The ramifications of this line of inquiry stretch beyond mere linguistic curiosity. The researchers speculate that understanding the “Arrow of Time” principle could become a tool for identifying characteristics of intelligence itself. If LLMs show difficulties in reverse prediction that align with human cognitive struggles, this could signify a shared trait in how intelligent systems process information, an avenue that might lead to the development of more robust and effective LLMs.

Notably, Hongler and his colleagues stumbled upon these revelations while collaborating with a theater school in 2020 to create a chatbot capable of improvising alongside live actors. This unexpected journey highlighted a unique intersection between artistic expression and computational linguistics, illuminating how language intertwines with time, storytelling, and creativity. The initial goal—to enable a chatbot to reverse-engineer narratives with predetermined endings—sparked wider inquiries into the nature of language and its relationship to intelligence.

As we stand on the cusp of further exploration into language models, the findings regarding the Arrow of Time present rich potential for deeper inquiry. This study not only invites fresh perspectives on language modeling technology but also bridges gaps in our understanding of intelligence, cognition, and the very passage of time.

While the concept of time may remain a philosophical enigma, these findings can provoke critical discussions about how we perceive and process language, ultimately contributing to the evolution of AI systems. The gap between forward and backward prediction suggests that the development of language models might need to account for these biases, potentially driving innovations that could redefine machine understanding and interaction.

The exploration of LLMs in the context of the Arrow of Time is just the beginning. As researchers continue to unravel the complexities of language prediction, we may unlock new dimensions of cognition, opening pathways to enhanced AI that reflects a richer understanding of our own processing capabilities and the intricacies of time itself.
